Contents tagged with Style

-

My Font and Color Settings for Visual Studio 2010

One of the first things I do each time I start using a new version of Visual Studio is to customize the font and color settings.

For Visual Studio 2010 this turned out to be a bit more complicated, though, because of the way XML documentation comments are rendered in the new editor.

I like my doc comments to be shown with a background color, but unlike earlier versions of Visual Studio, VS2010 does not extend the background color to the right margin; it stops at the last character of the line, which turns out to be kind of ugly.

Fortunately, there’s an extension for that.

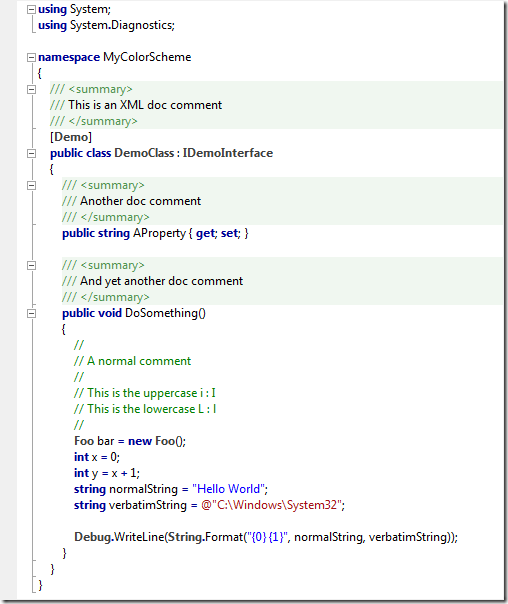

So in order to get something similar to this…

…you’ll need the Background Color Fix extension by Noah Richards. Note that the extension doesn’t always reflect changes you make to the colors immediately; you’ll have to close and open documents or even restart Visual studio. But once you’re set, this is a non-issue.

My personal font and color settings for Visual Studio 2010 shown in the screenshot above can be downloaded here (right-click the link and "Save Target/Link As").

-

Is Your IDE Hot or Not?

Interesting: Devs posting their font and color settings to a website (http://idehotornot.ning.com).

Most of the themes are pretty dark; it seems like people either have fond memories of their first PC or watched too many Hollywood “hacker” movies (no offense guys, just kidding ;-). Right now reading the tag cloud is like “tag tag tag tag DAAAHAAAAARK!!!! tag tag tag light” (note that due to the dynamic nature of the website this may no longer be the case at the time you’re reading this).

Long-time readers of my blog know that I’m a proponent of proportional width fonts for editing source code, and I like to play around with all the customization features of the IDE (e.g. bold fonts for certain elements). I published the results of my experiments last year in a blog post; now I’ve uploaded my settings for Visual Studio 2005 to the “IDE hot or not” website, where you can review and rate them.

Update 2007-07-28: The “IDE hot or not” website has downscaled my original image of the color scheme to a point where it’s hard to see anything. So I’ll post the image here:

-

Firefox 2.0 : Going Back to 1.5 Style...

Ok, I really tried, but after working with Firefox 2.0 for a few days I still don’t like the Close buttons on the tabs (I know IE7 has them, too, but that doesn’t make things more usable for me personally). Fortunately it’s simple to go back to 1.5 style:

- Enter about:config in the address bar

- Set browser.tabs.closeButtons to 3

- Restart Firefox

And if you prefer the icons of Firefox 1.5, there’s a theme for you on Mozilla.org.

-

My Personal Fonts and Colors Settings for Visual Studio 2005

After reproducing my old VS.Net 2003 settings (using a proportional font) on Visual Studio 2005 and playing around with features like bold fonts, I’ve finally settled on fonts and colors settings. Here’s a preview of what source code looks like:

Download: FontsAndColors.vssettings.

When you import the settings using the “Import and Export Settings Wizard” (in the Tools menu), please do yourself a favor: Keep the default “Yes, save my current settings” and create a backup of your settings, so you can go back to your old fonts and colors if you want.

-

Why throwing an exception should be the exception

Alex writes about the impact of throwing an exception on performance and that it may not be as bad as one may assume. In the comments, people write that using exceptions for flow control is not the Right Thing to do.

Here’s my #1 reason why throwing an exception for flow control is definitely not a good idea: Debugging. When I’m debugging and I’ve set the debugger to “break on CLR exceptions” (and no, I don’t want to get more specific when I switch that option on and off dozens of times per day), I don’t want code execution to halt over and over again until I get to the actual problem.

It’s bad enough if some exceptions in non-exceptional situations cannot be avoided — I don’t need to introduce them in my own code.
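To make the debugging argument concrete, here is a minimal sketch. The class and method names (`PortParser`, `ParseWithException`, `ParseWithoutException`) are made up for illustration; only `int.Parse` and `int.TryParse` are real framework calls. The first method uses an exception for an ordinary "not a number" case, so a debugger set to break on thrown CLR exceptions halts on every malformed input. The second method returns the same result without throwing.

```csharp
using System;

// Hypothetical example class, not taken from any real code base.
public static class PortParser
{
    // Exception-based flow control: malformed input raises a
    // FormatException that is caught right away. The result is fine,
    // but a debugger breaking on thrown exceptions stops here each time.
    public static int ParseWithException(string text, int fallback)
    {
        try
        {
            return int.Parse(text);
        }
        catch (FormatException)
        {
            return fallback;
        }
    }

    // Same outcome for malformed input, but nothing is thrown,
    // so the debugger stays quiet.
    public static int ParseWithoutException(string text, int fallback)
    {
        int value;
        return int.TryParse(text, out value) ? value : fallback;
    }
}
```

Calling either method with "abc" and a fallback of 80 yields 80; the difference only shows up when the debugger is set to break on thrown exceptions.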

-

Raising C# events doesn't feel right (and seems to have problems too)

[This blog post was inspired by reading “Events, Delegate and Multithreading” by Brad Abrams.]

I'm pretty sure the language designers thought about the whole thing way longer than I did, but from the very beginning raising events simply didn't "feel right". I’m not talking about the pattern of using “On…” methods — while that was something I (with an extensive Javascript background) had to get used to, it was a typical case of “what? whose idea was that? hmm, that’s not so dumb after all… hey, good idea!”.

No, it’s the way of actually raising the event inside the “On…” method. In the blog post mentioned above, Eric Gunnerson is quoted: “We have talked about adding an Invoke() keyword to the language to make this easier, but after a fair bit of discussion, we decided that we couldn't do the right thing all the time, so we elected not to do anything”. Well, but that’s exactly what I’m missing: a keyword. While I don’t miss much from VB6 (ok, maybe the command window), I really miss the good ol’ RaiseEvent.

When you look at source code, keywords are something that catches your eye. While you are bombarded with lots and lots of words (names of classes, variables, methods, etc.), keywords like “delegate”, “event”, “throw”, “override” (man, I’m thankful for that one!) stand out, not only because of the coloring inside the editor, but also because of the different syntax compared to lines and lines of “variable = Method(parameter)”.

For example throwing an exception:

    throw new FooException("bar");

It says “throw” and you can virtually see some referees throwing a yellow flag.

If I declare a delegate “FooHandler” and an event "Foo"

    public delegate void FooHandler(object sender, FooArgs e);
    public event FooHandler Foo;

then one can argue about the overall approach (is it too complicated, yes or no?), but nevertheless it’s quite clear what the code is trying to tell me: This is a delegate, and this is an event, and there seems to be some connection between those two (because FooHandler appears in both).

But then comes the big letdown: the code for raising the event. Until recently I would have used

    protected virtual void OnFoo(FooArgs e)
    {
        if (Foo != null)
            Foo(this, e);
    }

Now I’ve read the recommendation to use

    protected virtual void OnFoo(FooArgs e)
    {
        FooHandler handler = Foo;
        if (handler != null)
            handler(this, e);
    }

Sorry, but this doesn’t survive my personal test for “is the important stuff easily discoverable”: Step back from the monitor, squint your eyes until things look kind of blurry, and then collect all the information you can get from what you see with a quick look. For fun, try it with the code example. While “throw new blah blah” and “public event blah blah blah” give you a rough idea of what’s happening, you have to look again to find out what “protected virtual blah handler handler blah handler null blah this” is all about.

To avoid misunderstandings: This is not about understanding a programming language without learning it. What I’m talking about is browsing code really fast, looking at each line for only a fraction of a second, and not actually thinking about the code, but just matching some basic patterns inside your brain. It’s the stuff we’re all doing automatically when scrolling up and down while editing text.

Ok, this post is longer than it should be, so let’s cut this whole thing short and get to the point:

Comparing raising an event with throwing an exception, and keeping in mind that the official wording (according to the Design Guidelines for Class Library Developers) is that exceptions are "thrown" and events are "raised", I would personally prefer:

    protected virtual void OnFoo(FooArgs e)
    {
        raise Foo(this, e);
    }

What should the “raise” keyword do internally? Let me play the role of the unfair customer here: I don’t care, just make it work. I want to raise an event and that should work without the danger of a race condition or whatever.
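For readers on newer compilers: there is still no “raise” keyword, but from C# 6 on the null-conditional operator gets reasonably close to a one-liner. The sketch below reuses the `Foo`, `FooHandler` and `FooArgs` names from above; the `Publisher` class and its `TriggerFoo` method are invented here purely so the example can be run. `Foo?.Invoke(this, e)` reads the delegate once and calls it only if it is not null, which covers the null check from the recommended pattern. It does not remove every threading concern, since a handler can still be called shortly after it has unsubscribed.

```csharp
using System;

public class FooArgs : EventArgs { }

public delegate void FooHandler(object sender, FooArgs e);

// Hypothetical class, only here to host the event for the demo.
public class Publisher
{
    public event FooHandler Foo;

    protected virtual void OnFoo(FooArgs e)
    {
        // Null-conditional invocation (C# 6 or later): the delegate is
        // evaluated once and invoked only when there are subscribers.
        Foo?.Invoke(this, e);
    }

    // Hypothetical public entry point so the event can be raised
    // from outside the class in this example.
    public void TriggerFoo()
    {
        OnFoo(new FooArgs());
    }
}
```

It is still not a keyword that survives the squint test, but at least the word “Invoke” sits right next to the event name.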

-

The Power of First Impression

After months of ZIPping up the GhostDoc source files every now and then, I finally have a source code management solution running (better late than never ;-). I've built and set up a server from spare parts, mainly to be used for storing files (e.g. backups and VMware images), on which I installed SourceGear Vault. Since I use SourceSafe at work, this was an obvious choice for me. Usually I don't shy away from learning new things, but this time I didn't want to invest time in learning a system with a different philosophy (e.g. Subversion).

Now I have to admit a few things: While there are quite a few things I'm really good at, I have no or only superficial knowledge when it comes to administering/configuring

- databases

- IIS

- ASP.NET applications

So this is me, not wanting to invest a lot of time, and I want to install a product that

- uses a database (MSDE in my case, which I have never installed before)

- is running on IIS

- is an ASP.NET application

And you know what? I got things running without any problems worth mentioning. The combination of a good installer (e.g. no editing of configuration files required) and an installation guide on the product's website with lots of screenshots enabled me to install this product. Creating a repository was also pretty easy and just a matter of minutes.

So I'm happy to report that GhostDoc is now under source control.

Maybe it turns out that I just installed the worst product imaginable. Maybe things go wrong from now on in every imaginable way. But right now I trust this product. It just feels right. From the splash screen (nice, but not too fancy) up to the help file (not really spectacular, but it was able to answer the few questions I had). Everything made a good first impression.

Now there's another application I installed on the server (I will not mention the name here, as it would be pretty unfair). When I start it, I get a "SqlException: SQL Server does not exist or access denied" error message. I couldn't find what to do in the documentation, the product's website does not have a FAQ, and there's no forum, only an email address I can write to. This is what I'll do, because I'm pretty sure that once things are running, the application will be what I was looking for. But I guess you agree that the first impression is spoiled.

As a developer I know that I'm completely unfair here, but as a customer (even though in both cases I don't have to pay as a single user) I don't care about being fair. Whether you like it or not, the first impression plays a vital role in how users view your software.

So do yourself a favor: When you release software (even if it's just a small tool), invest enough time in what could be called "the most important minutes" of your program. A couple of months after the release of GhostDoc, I'm happy to notice people praise the "out of the box experience". Let me tell you it didn't come easy. Things weren't perfect from the start and a lot of time and testing went into getting things right. I actually bought VMware Workstation especially for testing GhostDoc on different system configurations (virtual machines simply rock, period). Did all the effort pay off? After all, I could have implemented lots and lots of features, so GhostDoc would produce less funny results in certain situations and would be as useful as I know it could be. But all I can say is yes, it was worth the effort. GhostDoc was downloaded a bit more than 1000 times (all versions combined) and while this may not be too impressive, I'm proud that I didn't receive a single question about the first steps. I receive bugs and feature requests regarding the text generation, people wanting to tweak things, people wanting to code their own rules, but not a single one of those "I can't get it to run, what now?" questions that quickly eat away lots of time from actually developing software.

-

My Transition from Fixed to Proportional Width Fonts for Editing Source Code

I always find it interesting to look at source code I wrote years ago. Old dot-matrix printouts (BASIC and Z80 Assembly code from the 80's...) found in a drawer while searching for something else. Or source files in some remote "OldStuff" folder on my storage hard drive.

These old sources bring back lots of memories and it's nice to see the evolution of my personal style, which shows several cycles of first changing only gradually for some time, then suddenly getting a visible boost after being exposed to new concepts and ideas.

One thing that really amazes me in my source files back from the 90's is the amount of time wasted on formatting and indentation (sometimes up to the point of thinking "wow, did I do that?"). I'm speaking of things like comment headers for methods:

    /******************************************************
     * Method:    CreateItem
     * Purpose:   Creates a new foobar item using the
     *            specified ID
     * Parameter: id = ID of the item
     * Returns:   created item
     ******************************************************/

Or end-of-line comments. Often I can literally see code statements and comments fighting for space, with the comments eventually losing and being split to two or more lines:

    splurbyUpdateBits=noobahFlags & pizkwatMask; // a comment explaining what
                                                 // this line is all about,
                                                 // spanning several lines

And another waste of time: lining up variable names and comments in declarations:

    int      n=0;     // A comment
    SomeType theItem; // Another comment

A few years ago I started experimenting with proportional fonts for the source code editor, inspired by screenshots of Smalltalk and Oberon systems I saw in magazines. After finding out that Verdana (unlike Arial) is well-suited as a developer's font, displaying the uppercase I, the lowercase L and the pipe symbol differently, I forced myself to use this font for a while.

The increase in readability was amazing (especially for long identifiers), but my existing source code was a mess when viewed in the editor - all elaborate formatting using tabs and spaces was gone. Not wanting to go back, I had to adjust my commenting and formatting style:

- I chose a less elaborate design for comment headers (which I won't reproduce here as it was C/C++ specific; now using C# with its XML documentation comments I never felt the need for exactly lining up anything)

- Wrapping end-of-line comments was no longer an option. Sure, you can line up the comments in your editor using tabs, but in other people's editors (using a different font) the result is unpredictable. So I reduced the need for EOL comments

    - by moving some information to section comments that explain the intention of several code lines, and

    - by writing better code (yeah I know, d'oh!), as long EOL-comments usually are an indicator for bad/complicated parts

- I simply stopped formatting variable declarations. Maybe it was a nice thing to do in plain C, but in C++, Java and C# long lists of variable declarations are much less common anyway. What benefited most from lining up were the end-of-line comments behind variable names. But with the switch to a proportional editor font (supported by the Intellisense feature) the length of my variable names grew considerably, and with more expressive names, most comments were no longer required. Now, the few remaining comments don't need to be lined up.
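As a small illustration of the style I ended up with, here is a sketch in C#. The class, method and variable names (`InvoiceMath`, `TotalWithTax`, and so on) are invented for this example. Instead of a wrapped end-of-line comment behind each statement, one section comment states the intention of the following lines, and the longer variable names carry the rest. Nothing in it needs to be lined up, so it looks the same in a proportional and a fixed-width font.

```csharp
using System;

// Hypothetical example, names chosen only for illustration.
public static class InvoiceMath
{
    public static decimal TotalWithTax(decimal netAmount, decimal taxRate, decimal discountRate)
    {
        // Apply the discount first, then add tax on the reduced amount.
        decimal discountedAmount = netAmount * (1m - discountRate);
        decimal taxAmount = discountedAmount * taxRate;

        return discountedAmount + taxAmount;
    }
}
```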

I've been using a proportional editor font (Verdana 8pt) for quite some time now and I wouldn't voluntarily change back to a fixed-width font. At the same time it must be noted that the transition may not be without problems, depending on your current personal style. But I can definitely recommend giving it a try.

-

Don't Underestimate the Benefits of "Trivial" Unit Tests

Take a look at the following example of a trivial unit test for a property. Imagine a class MyClass with a property Description:

    public class MyClass
    {
        ...
        private string m_strDescription;

        public string Description
        {
            get { return m_strDescription; }
            set { m_strDescription=value; }
        }
        ...
    }

And here's the accompanying unit test:

    ...
    [ Test ]
    public void DescriptionTest()
    {
        MyClass obj=new MyClass();
        obj.Description="Hello World";
        Assert.AreEqual("Hello World", obj.Description);
    }
    ...

Question: What's the benefit of such a trivial test? Is it actually worth the time spent for writing it?

As I have learned over and over again in the past months, the answer is YES. First of all, knowing that the property is working correctly is better than being "really, really sure". There may not be a huge difference in the moment you write the code, but think again a couple weeks and dozens or hundreds of classes later. Maybe you change the way the property value is stored, e.g. something like this:

    public string Description
    {
        get { return m_objDataStorage.Items["Description"]; }
        set { m_objDataStorage.Items["Description"]=value; }
    }

Without the unit test, you would test the new implementation once, then forget about it. Now another couple of weeks later some minor change in the storage code breaks this implementation (causing the getter to return a wrong value). If the class and its property are buried under layers and layers of code, you can spend a lot of time searching for this bug. With a unit test, the test simply fails, giving you a pretty good idea of what's wrong.

Of course, this is just the success story... don't forget about the many, many properties that stay exactly the way they were written. The tests for these may give the warm, fuzzy feeling of doing the "right thing", but from a sceptic's point of view, they are a waste of time. One could try to save time by not writing a test until a property's code is more complicated than a simple access to a private member variable, but ask yourself how likely it is that this test will be written if either a) you are under pressure, or b) somebody else changes the code (did I just hear someone say "yeah, right"? ;-). My advice is to stop worrying about wasted time and to simply think in terms of statistics. One really nasty bug found easily can save enough time to write a lot of "unnecessary" tests.

As already mentioned, my personal experience with "trivial" unit tests for properties has been pretty good. Besides the obvious benefits when refactoring, every couple of weeks a completely unexpected bug gets caught (typos and copy/paste- or search/replace mistakes that somehow get past the compiler).

-

Looking for a New Year's Resolution?

There are many exciting .NET topics waiting for us in 2004. Simply too much stuff to be tried and understood, too much knowledge to be gained. If you're making plans for 2004, here's one single thing I can fully recommend:

If you're not already doing it, start writing unit tests using a test framework.

Unit testing is one of these "I really should be doing this" concepts. Even though I read and heard about unit testing years ago, I only started doing it in 2003. Sure, I always had some kind of test applications for e.g. a library - who hasn't written one of those infamous programs consisting of a form and dozens of buttons ("button1", "button2", ...), each starting some test code. And it's not as if my software was of poor quality. But looking back, unit testing was the thing in 2003 that made me a better developer.

I'm using NUnit for my unit tests; it's so easy to use that a typical reaction of developers being introduced to NUnit is "What? That's all I have to do?". To get started, visit this page on the NUnit website, and follow the steps in the first paragraph "Getting Started with NUnit".

When I began writing unit tests in early 2003, I wrote tests for existing code. If this code (e.g. a library) is already successfully in use, this can be pretty frustrating, because the most basic tests are all likely to succeed. My first tests were pretty coarse, testing too much at once - maybe because the trivial tests (e.g. create a class instance, set a property and test whether reading it has the expected result) seemed like a waste of time.

In the course of time I moved more towards "test driven development", i.e. writing tests along with the code, often even before the implementation is ready. Now, if I create a new project, I always add a test project to the solution. This way my code and the corresponding tests never get out of sync. If I make a breaking change, the solution won't compile - it's that easy.

If you take this approach (writing tests very early), even testing the most basic stuff can be pretty rewarding:

- Sometimes typos or copy/paste mistakes are not caught by the compiler (e.g. when the property getter accesses a different member variable than the property setter) - one bug like this found by a unit test written in 5 minutes can save you hours of debugging through a complete application.

- It's a very good test for the usability e.g. of your API. If it turns out that even a simple task requires many lines of code, you definitely should re-design your API (which is less of a problem at that early stage of development).

- The unit tests are some sort of documentation of how your code is used - don't underestimate how helpful this can be (by the way: I wrote a simple tool for generating examples for online documentation from unit tests).

- Last but not least: I know that some of the unit-testing folks don't like debuggers, but fine-grained unit tests are very good entry points for single stepping through your code.
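The copy/paste case from the first point can be sketched as follows. All names here (`IHasLastName`, `BuggyPerson`, `FixedPerson`, `TrivialPropertyCheck`) are made up for the example, and to keep it self-contained the check is a plain method returning a bool; in a real project it would be an NUnit test with an assertion such as `Assert.AreEqual`. In `BuggyPerson` the `LastName` getter reads the wrong field. The code still compiles, but the trivial set-then-get check fails right away.

```csharp
using System;

// Hypothetical interface so the same check can run against both classes.
public interface IHasLastName
{
    string LastName { get; set; }
}

public class BuggyPerson : IHasLastName
{
    private string m_strFirstName;
    private string m_strLastName;

    public string FirstName
    {
        get { return m_strFirstName; }
        set { m_strFirstName = value; }
    }

    public string LastName
    {
        // Copy/paste mistake: the getter returns the first name field.
        get { return m_strFirstName; }
        set { m_strLastName = value; }
    }
}

public class FixedPerson : IHasLastName
{
    private string m_strLastName;

    public string LastName
    {
        get { return m_strLastName; }
        set { m_strLastName = value; }
    }
}

public static class TrivialPropertyCheck
{
    // Stand-in for a unit test: set the property, read it back,
    // and report whether the value survived the round trip.
    public static bool LastNameRoundTrips(IHasLastName person)
    {
        person.LastName = "Smith";
        return person.LastName == "Smith";
    }
}
```

Running the check against `BuggyPerson` reports a failure, while `FixedPerson` passes.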

So... what about a New Year's Resolution to start writing unit tests?